Introducing The Model Router

Route each query to the best LLM with the model router. Higher performance and lower cost than any individual provider. See the results for yourself.

Guaranteed uptime

Your customers can't afford for you to go down. At Martian, we keep you up by automatically rerouting to other providers when a model or provider goes down. Your customers never experience downtime, and the backup models still deliver high performance.

Jan to Nov: Users experienced downtime on 46 days (14.4% of total days)
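For readers who want to picture the rerouting idea, here is a minimal sketch of trying providers in order and falling back when one is down. It is an illustration only, not Martian's implementation: `ProviderDown`, `complete_with_fallback`, `primary`, and `backup` are hypothetical names, and the provider functions are stand-ins rather than real API clients.

```python
from typing import Callable, List, Sequence, Tuple


class ProviderDown(Exception):
    """Hypothetical error a provider stand-in raises when it cannot serve a request."""


def complete_with_fallback(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each provider in order and return (provider_name, response)
    from the first one that answers."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderDown as exc:
            # Record the failure and move on to the next provider.
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


def primary(prompt: str) -> str:
    # Stand-in for a provider that is currently down.
    raise ProviderDown("service unavailable")


def backup(prompt: str) -> str:
    # Stand-in for a healthy backup provider.
    return f"echo: {prompt}"


name, text = complete_with_fallback("hi", [("primary", primary), ("backup", backup)])
print(name, text)
```

In this sketch the caller gets a response whenever at least one provider in the list is healthy. A production router would also need timeouts, retries, and a way to pick which backup to try first.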

Outperforms GPT-4

We beat GPT-4 on the very tasks OpenAI uses to evaluate its own performance.

You wouldn’t send an HR problem to your finance department, so why do the same with LLMs? With Martian’s multi-LLM router, you get better performance on specific tasks while drawing on the strengths of all LLMs.

With a team of models, your organization can achieve higher accuracy at a lower cost than with a single model.

Achieve higher performance and lower cost than any individual model.

Future-proof your AI integrations

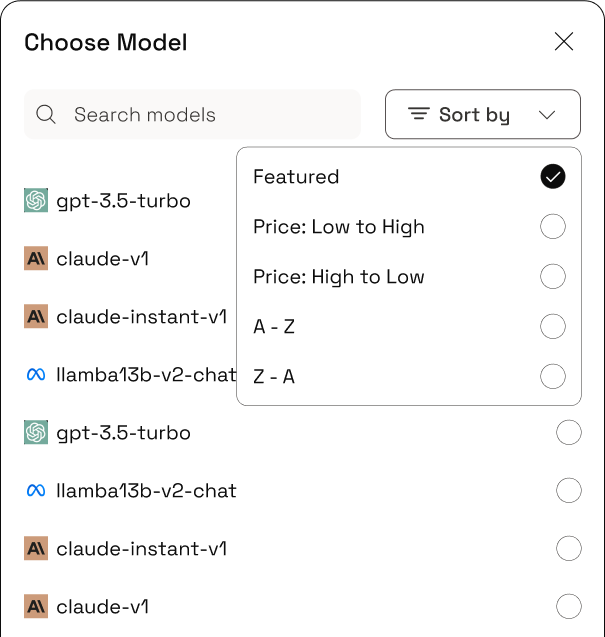

Stay up to date with the latest models as they’re auto-integrated into our routing system. We add every new model as soon as it is released, so there’s no need to scramble to update.

We're fortunate to be supported by the world's best VCs.